Why GPT works

GPT == DNN == approximation

Deep learning has been a hot topic in recent years, and there are plenty of articles explaining the mathematics behind it.

GPT-3, the language generator released by OpenAI, shows us that artificial intelligence built on deep learning can behave in a way similar to humans. It can ask questions, answer questions, and write articles convincing enough to make us believe it could pass the Turing test.

Now a question pops up

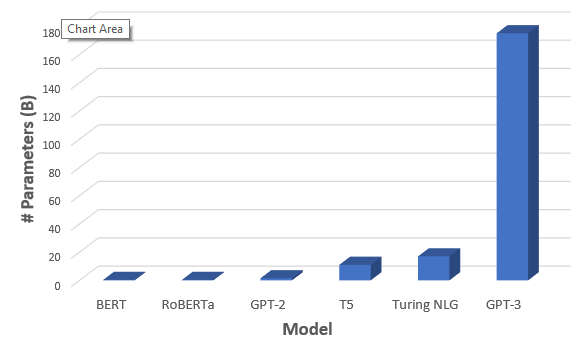

175 billion parameters == human brain??

Some people ask.

The Answer

No.

Well, it behaves like a human brain, but it is not the same thing.

To explain why it works, we need to know that GPT uses deep learning, and deep learning does approximation. So what does GPT-3 approximate?

Remember how we talked earlier about how game theory works? Game theory approximates the choice of action without considering the complex thinking inside the human brain. Now, what do GPTs approximate? The choice of words.

Choice of words?

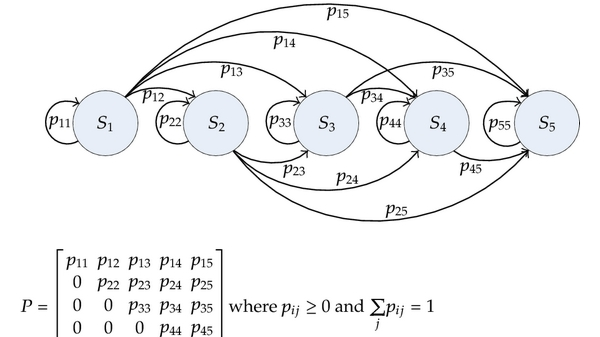

That reminds us of a Markov chain.
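To make the Markov chain idea concrete, here is a minimal sketch of a word-level chain that picks the next word by looking only at the current one. The toy corpus and the helper names `build_bigram_table` and `generate` are made up for illustration, and this has nothing to do with how GPT-3 is actually implemented.

```python
import random
from collections import defaultdict

def build_bigram_table(text):
    """Map each word to the list of words observed right after it."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table

def generate(table, start, length, seed=0):
    """Walk the chain: the next word depends only on the current word."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length - 1):
        candidates = table.get(word)
        if not candidates:  # dead end: nothing ever followed this word
            break
        word = rng.choice(candidates)
        output.append(word)
    return output

# Toy corpus, an assumption for this example only.
corpus = "the cat sat on the mat and the dog sat on the rug"
table = build_bigram_table(corpus)
sentence = generate(table, "the", 6)
print(" ".join(sentence))
```

Words that followed "the" more often in the corpus appear more often in its candidate list, so `rng.choice` samples them in proportion to how frequently they were seen.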

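GPT-3 behaves and works in a similar way, except that there is no “word” for GPT-3: words are mapped into vectors. Following the idea popularized by Word2Vec, words (more precisely, tokens) are turned into vectors in a continuous latent space, and GPT-3 learns these vectors itself during training.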

In that latent space, GPT-3 can move in many directions when choosing the next word, while a Markov chain moves in a single direction, from the current word to the next.
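Here is a rough sketch of what "words as vectors" looks like. The three-dimensional vectors below are made up by hand and are not real Word2Vec or GPT-3 embeddings, which have hundreds or thousands of dimensions; the function names are also just for this example. The point is only that closeness in the latent space can be measured, here with cosine similarity.

```python
import math

# Hand-made toy vectors, NOT real Word2Vec or GPT-3 embeddings.
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word):
    """Return the other word whose vector points in the most similar direction."""
    return max(
        (other for other in embeddings if other != word),
        key=lambda other: cosine_similarity(embeddings[word], embeddings[other]),
    )

print(nearest("king"))
```

With these toy numbers, "king" lands much closer to "queen" than to "apple", which is the kind of structure a plain lookup table of words cannot express.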

GPT-3 does not know what it says

Someone gave GPT-3 a Turing test.

We can see that GPT-3 is capable of organizing words the way humans do, but it does not think logically. Not today, I guess.

Short conclusion

Texts are formed with symbols.

The symbols used in text are limited.

GPTs try to approximate the choice of words when presenting an idea.

The idea itself takes the form of other text, composed from a countable set of symbols.

Which means it transforms one text into another. I guess this is why the architecture behind these language models is called the “Transformer”.

It is similar to game theory: we simplify human behaviour with matrices and payoffs because, however complex one's thinking is, there are only a limited number of actions one can take in the game. So we can simplify the thinking and approximate human behaviour.
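As a small illustration of the game theory side, here is a sketch of a payoff matrix with only two possible actions. The payoff numbers follow the usual prisoner's dilemma pattern but are picked for this example, and `best_response` is a hypothetical helper name. However complicated the player's thinking is, the model only needs to compare a handful of payoffs.

```python
# Payoffs for "me", indexed by (my action, opponent's action).
# Illustrative prisoner's-dilemma-style numbers, chosen for this example.
ACTIONS = ["cooperate", "defect"]
PAYOFF = {
    ("cooperate", "cooperate"): -1,
    ("cooperate", "defect"): -3,
    ("defect", "cooperate"): 0,
    ("defect", "defect"): -2,
}

def best_response(opponent_action):
    """Pick my action with the highest payoff against a fixed opponent action."""
    return max(ACTIONS, key=lambda mine: PAYOFF[(mine, opponent_action)])

for theirs in ACTIONS:
    print(theirs, "->", best_response(theirs))
```

The whole "decision" collapses into a lookup over a tiny table, which is the same kind of simplification as treating writing as a choice of the next word.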

And by the way, people don’t really think much when organizing words, so the approximation is easy to do. ;)

More about GPT-3 from a deep learning point of view: How GPT-3 Works by @jalammar

The grammar of this article was checked by the “transformers”, many thanks to them: