YoloV5 Wheelchair detector

YoloV5 is an object detection model implemented in PyTorch and inspired by the Darknet YOLO models, although it is not officially a member of the YOLO family. I was interested in trying it, and the detectors I found on the home page of the mobility aids dataset are mainly R-CNN based models, so I decided to take a different approach with YoloV5.

⚠ This dataset is provided for research purposes only. Any commercial use is prohibited.

```bibtex
@INPROCEEDINGS{vasquez17ecmr,
```

Download YoloV5

You can find YoloV5 here: https://github.com/ultralytics/yolov5

You can clone the source code, as I did:

```bash
git clone https://github.com/ultralytics/yolov5.git
```

Or you can use the Docker image, which includes the source code:

```bash
docker run --gpus all --rm -v "$(pwd)":/root/runs --ipc=host -it ultralytics/yolov5:latest
```

I don't keep many Docker containers around, so I added --rm to remove the container when I exit it. If you want to keep the container for further training later, remove the --rm option from the command above.

I also mounted the current working directory at /root/runs with the option -v "$(pwd)":/root/runs, so that training and inference results are written to the current working directory outside the Docker container. You can change this based on your needs.

For more help with Docker, read the Docker run reference.

Download Dataset

I saw a folder called data in the root of the YoloV5 project and decided to put the dataset there. You can save the dataset anywhere you like, but remember to update the paths in my script that converts the Pascal VOC-style annotations to YOLO format.

From here on, I assume you are inside the Docker container. Change into the data/ folder of the YoloV5 project:

```bash
cd data
```

Download the images, unzip them into images/train/, then remove the zip file:

```bash
curl -L http://mobility-aids.informatik.uni-freiburg.de/dataset/Images_RGB.zip -o Images_RGB.zip
```

Download the training labels, unzip them into labels/train/, then remove the zip file:

```bash
curl -L http://mobility-aids.informatik.uni-freiburg.de/dataset/Annotations_RGB.zip -o Annotations_RGB.zip
```

Download the test labels, unzip them into labels/test/, then remove the zip file:

```bash
curl -L http://mobility-aids.informatik.uni-freiburg.de/dataset/Annotations_RGB_TestSet2.zip -o Annotations_RGB_TestSet2.zip
```
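All three archives can also be downloaded, unzipped and cleaned up from Python using only the standard library. This is a minimal sketch, not the commands from this post. The helper names extract_and_remove and download_all are hypothetical. The sketch assumes each archive's files should land directly in the target folder; if an archive contains a top-level folder, move its contents up one level afterwards. Run it from the data/ directory.

```python
import os
import urllib.request
import zipfile

# URLs from the steps above, mapped to the folder each archive is unzipped into.
BASE_URL = "http://mobility-aids.informatik.uni-freiburg.de/dataset/"
ARCHIVES = {
    "Images_RGB.zip": "images/train",
    "Annotations_RGB.zip": "labels/train",
    "Annotations_RGB_TestSet2.zip": "labels/test",
}


def extract_and_remove(zip_path, dest_dir):
    """Unzip zip_path into dest_dir, then delete the archive."""
    os.makedirs(dest_dir, exist_ok=True)
    # Assumption: the archive's contents go straight into dest_dir.
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest_dir)
    os.remove(zip_path)


def download_all(base_url=BASE_URL, archives=ARCHIVES):
    """Download each archive (like curl -L ... -o name), then unzip and remove it."""
    for name, dest in archives.items():
        urllib.request.urlretrieve(base_url + name, name)
        extract_and_remove(name, dest)


if __name__ == "__main__":
    download_all()
```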

You can also download the files with a browser from the home page of the mobility aids dataset if you are not using Docker, or if you are mounting a host directory for the dataset.

Prepare dataset

Dataset split

We need to split the images into training and test sets. I wrote a script that uses the names of the label files to work out which images belong to the training set and which belong to the test set.

Since you are still in the data/ directory, start python and paste in the following script. Alternatively, save the script to a file and run it.

```python
import os
```
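Here is a minimal sketch of such a split script. It is not the original script, and the function name split_images is hypothetical. It rests on three assumptions:

* All images were unzipped into images/train/.
* Each label file has the same name as its image apart from the extension.
* Images whose labels sit in labels/test/ should move to a new images/test/ folder. Everything else stays in images/train/.

```python
import os
import shutil


def split_images(images_train="images/train", images_test="images/test",
                 labels_test="labels/test"):
    """Move every image that has a label in labels_test into images_test."""
    os.makedirs(images_test, exist_ok=True)
    # Index images by file name without extension, e.g. "seq_1" -> "seq_1.png".
    images = {os.path.splitext(f)[0]: f for f in os.listdir(images_train)}
    moved, missing = 0, []
    for label_file in os.listdir(labels_test):
        stem = os.path.splitext(label_file)[0]
        image_file = images.get(stem)
        if image_file is None:
            # A test label with no matching image; report it instead of failing.
            missing.append(label_file)
            continue
        shutil.move(os.path.join(images_train, image_file),
                    os.path.join(images_test, image_file))
        moved += 1
    return moved, missing


if __name__ == "__main__":
    moved, missing = split_images()
    print(f"moved {moved} images to images/test, {len(missing)} labels without an image")
```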

Now we have the images ready.

Label conversion

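The labels are in YAML format, so we need to convert them into text files with one line per object in the form class_id center_x center_y width height. The box center and size are normalized by the image width and height. My script for this requires the yaml module for parsing the YAML files and tqdm for showing progress.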
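The YOLO label format stores each box by its normalized center and size. Take a box with corners (x_min, y_min) and (x_max, y_max) in an image W pixels wide and H pixels tall. Its four numbers are computed as follows, with a small worked example below the formula:

```latex
c_x = \frac{x_{\min} + x_{\max}}{2W}, \quad
c_y = \frac{y_{\min} + y_{\max}}{2H}, \quad
w = \frac{x_{\max} - x_{\min}}{W}, \quad
h = \frac{y_{\max} - y_{\min}}{H}

% Example: W = 200, H = 100, box corners (20, 10) and (60, 50)
c_x = \frac{80}{400} = 0.2, \quad
c_y = \frac{60}{200} = 0.3, \quad
w = \frac{40}{200} = 0.2, \quad
h = \frac{40}{100} = 0.4
```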

Install script dependencies

```bash
pip install pyyaml tqdm
```

Here is my script. It converts each YAML file to a text file and then removes the YAML file.

```python
import os
```
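Here is a minimal sketch of the conversion, not the original script. The function names voc_to_yolo_lines and convert_dir are hypothetical. The YAML layout is an assumption modeled on Pascal VOC, so check it against a few of your files before relying on it:

* An optional top-level annotation key.
* A size entry with width and height.
* An object entry, either a list or a single dict, whose items have name and bndbox with xmin, ymin, xmax and ymax.

The sketch needs PyYAML (pip package pyyaml) and tqdm.

```python
import os

# Class order from this post; the list index becomes the class id in the label file.
LABELS = ['person', 'wheelchair', 'push_wheelchair', 'crutches', 'walking_frame']


def voc_to_yolo_lines(annotation, labels=LABELS):
    """Turn one VOC-style annotation dict (assumed layout) into YOLO label lines."""
    width = float(annotation['size']['width'])
    height = float(annotation['size']['height'])
    objects = annotation.get('object') or []
    if isinstance(objects, dict):  # a single object may be stored without a list
        objects = [objects]
    lines = []
    for obj in objects:
        class_id = labels.index(obj['name'])  # raises ValueError on an unknown name
        box = obj['bndbox']
        xmin, ymin = float(box['xmin']), float(box['ymin'])
        xmax, ymax = float(box['xmax']), float(box['ymax'])
        # Normalized center and size, as in the YOLO label format.
        cx = (xmin + xmax) / 2 / width
        cy = (ymin + ymax) / 2 / height
        w = (xmax - xmin) / width
        h = (ymax - ymin) / height
        lines.append(f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}")
    return lines


def convert_dir(label_dir):
    """Write a .txt next to every .yml/.yaml file in label_dir, then delete the YAML."""
    # Imported here so voc_to_yolo_lines can be used without these packages.
    import yaml
    from tqdm import tqdm

    yaml_files = [f for f in os.listdir(label_dir) if f.endswith(('.yml', '.yaml'))]
    for name in tqdm(yaml_files, desc=label_dir):
        path = os.path.join(label_dir, name)
        with open(path) as f:
            data = yaml.safe_load(f)
        annotation = data.get('annotation', data)  # assumed optional wrapper key
        with open(os.path.splitext(path)[0] + '.txt', 'w') as f:
            f.write(''.join(line + '\n' for line in voc_to_yolo_lines(annotation)))
        os.remove(path)


if __name__ == "__main__":
    for split in ('labels/train', 'labels/test'):
        convert_dir(split)
```

Looking up the class with labels.index() makes a misspelled class name fail loudly instead of silently producing a wrong class id.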

Before you run the script and delete the YAML files, you may want to verify the label names in them. If the list labels = ['person', 'wheelchair', 'push_wheelchair', 'crutches', 'walking_frame'] does not match the object names in the YAML files, your model will be useless.
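One way to do this check is to count every object name that is not in the labels list. Here is a minimal sketch. The function names are hypothetical, and it assumes a VOC-style layout: an object entry (list or single dict) whose items have a name key, optionally under a top-level annotation key. Reading files requires PyYAML.

```python
import os
from collections import Counter

# Class list from this post.
LABELS = ['person', 'wheelchair', 'push_wheelchair', 'crutches', 'walking_frame']


def object_names(annotation):
    """Yield the name of each object in one annotation dict (assumed VOC-style layout)."""
    objects = annotation.get('object') or []
    if isinstance(objects, dict):  # a single object may be stored without a list
        objects = [objects]
    for obj in objects:
        yield obj['name']


def unknown_names(annotations, labels=LABELS):
    """Return a Counter of object names that do not appear in labels."""
    counts = Counter(name for ann in annotations for name in object_names(ann))
    return Counter({name: n for name, n in counts.items() if name not in labels})


def load_annotations(label_dir):
    """Yield the parsed annotation of every YAML file in label_dir."""
    import yaml  # PyYAML, only needed when reading files
    for name in os.listdir(label_dir):
        if name.endswith(('.yml', '.yaml')):
            with open(os.path.join(label_dir, name)) as f:
                data = yaml.safe_load(f)
            yield data.get('annotation', data)


if __name__ == "__main__":
    for split in ('labels/train', 'labels/test'):
        print(split, unknown_names(load_annotations(split)) or "all names match")
```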

Add dataset to YoloV5

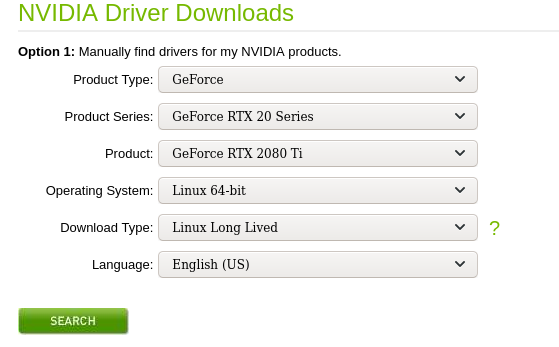

As you can see, the command to start training YoloV5 looks like this: python train.py --data voc.yaml

That means there is a file called voc.yaml in the data/ directory that tells YoloV5 where the training and validation images are and what the class names are. So to add our dataset, we need to create a similar YAML file in data/.

If you followed my instructions, your paths should be the same as mine. Otherwise, edit the paths to point to your dataset.

```yaml
# Mobility Aids Dataset
```
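Here is a hedged sketch of what such a dataset file can contain, generated from Python so the class list stays identical to the one used for label conversion. The key names train, val, nc and names follow the voc.yaml shipped with YoloV5 around 2020; newer releases may differ, so compare with the voc.yaml in your checkout. The function name dataset_yaml is hypothetical. The paths assume train.py is run from the YoloV5 root and that the test split doubles as the validation set. Only image folders are listed because YoloV5 finds each label file by replacing the images directory in the image path with labels and the extension with .txt.

```python
# Class order must match the class ids written by the label conversion.
LABELS = ['person', 'wheelchair', 'push_wheelchair', 'crutches', 'walking_frame']


def dataset_yaml(train_dir="data/images/train/", val_dir="data/images/test/",
                 labels=LABELS):
    """Build the text of a YoloV5 dataset file with train/val/nc/names keys."""
    names = ", ".join(f"'{name}'" for name in labels)
    return (
        "# Mobility Aids Dataset\n"
        f"train: {train_dir}\n"
        f"val: {val_dir}\n"
        f"nc: {len(labels)}\n"
        f"names: [{names}]\n"
    )


if __name__ == "__main__":
    # Assumes the current directory is the YoloV5 root, so the file lands next to voc.yaml.
    with open("data/mobility_aids.yaml", "w") as f:
        f.write(dataset_yaml())
```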

Now you should be ready for training.

Train

```bash
python train.py --data mobility_aids.yaml
```

Now… Wait…

After a few hours, the training finished on an RTX 2080 Ti.

I forgot to record the results; I think the mAP was around 0.8. Either way, the model does not work well on videos of wheelchairs on the street. My guess is that the dataset was recorded indoors, so it does not transfer to outdoor scenes. My bad.